artificial consciousness project

[Update Nov 2004--

Artificial Brain/Ear/Voice, for a 2004+ PC;

beginnings of a system which may eventually gain awareness,

available from the Downloads page.]

The topic here is not to be confused with silicon-based

life. The definition of life includes

physically self-detaching reproductive capability. The present subject

excludes such systemology.

Beyond this, one could draw parallels between the coarse aspects of

life, and counterparts within

the proposed systemology.

The fundamental building block of the approach

here is the concept of CR (conditioned response)

links. I suspect that this little trick of nature is the primary

ingredient of all variety of higher

learning and reasoning. Numbers are important in this, but the winning

organization of it is most

responsible for the relatively keen levels of awareness we enjoy.

The key to applying this concept to artificial

learning is the recognition that a neural net needs

temporal information, and that to learn such order, CR can be

employed in such a way that it is the

successive order of the stimuli that creates the links. The links are

significant within an ongoing,

cyclical process, relative to recurrences of similarly ordered stimuli.

To understand the reasoning

that has guided this approach, it is helpful to recall the philosophy

that has been developed as

reference frames. Timing is the true substance of reality. These

chapters, dealing more directly with

consciousness, needed those other chapters ahead of them. Those chapters

may be easier to

identify with, though, after getting through these. Programmers, circuit

designers, and biologists

are familiar with such interdependent relationships, as systems.

To successfully handle the process of temporal

data encoding, the system must parallel

biological mental systems. This is to say that gross organization must

be defined, to act as a CR

“tree.” Meaning rises into the tree by association, and likewise falls

out of the tree to produce

responses. It is essential that responses are then fed back in, to

be included in the process of

association. You can’t learn without feedback. There is no other way

to “know what you are doing.”

In bio-systems, this feedback takes place internally, as well as externally.

My Computer Is Warm

My computer uses the exact same kind of protons,

electrons, and neutrons as the ones used in

your brain. The rules involved with these two systems are obviously

from entirely different

branches of reality’s rule tree. But, through parallel logic, the end

result of each system may turn

out to be very similar, in terms of the logic supported as behavioral

waves in either system. This

could be like seeing nothing but water, as a place to drop a rock in,

to get waves; and then

discovering that it happens in the air too. Let us speculate that the

same kind of system of relative

logic is supported by either mass medium.

Both systems are energetic, so they produce

the customary stray infrared photons, we sense as

heat. One thing is for sure here... when my computer is cold, it is

a dead computer. Sometimes, when

I’m listening to it, and it’s warm, I really get the feeling that the

opposite is true. It gets a sort of

“mouse-ness.”

Beyond reproduction, the “life” factor in

neural cells may simply amount to being a battery; a

very special one that has “learned” to recharge itself through environmental

interplay — and

learned to keep its case intact, rebuild and repair as necessary,

revitalize its chemical components,

and replace them regularly. It’s a tiny little battery; ready to pull

duty in communications

applications. Life is not necessarily conscious. Maybe it’s the right

configuration of communication

that is. It’s a special kind of timing. It’s timing that relates meaning

to itself, over time.

The environment presents many opportunities

for enhanced survivability of such chemical

systems. As a community, the cells can “discover” the DNA program for

a number of features, that

are not unique to life; just available for use from physics... like

the lens. DNA was able to stumble

upon the lens, because it was simple, and it was there. DNA tends to

latch on to trends in

improvements of such features, because it is the memory to do so. The

advantages gained improve

the odds that the new sequence will survive to replicate. The replication

is the remembering. The

current status of a given feature then serves as a new starting point

for further refinement.

DNA found conscious decision-making communication-path

architecture, because it too was

simple and available; and very advantageous to survival. Like the lens

then, perhaps consciousness

can be there without life. Unlike the lens, it needs a battery.

The Silicon Neuron

The silicon neuron will emulate the essential

functions of a biological neuron. These may entail

complexities we have yet to discern. It is also possible that the required

features are a relatively

simple subset of the overall system. Our job is to figure out which

features are purely metabolic,

and which are required contributions to the support system of consciousness,

and its utility

capacities.

Consciousness and animal behavior are a function

of neurological transmission of data. They

cannot exist without it. We can safely assume that the fundamental

features of the neuron, that

support data transmission, are required, at the least. These include:

an input port (dendrites), an

output port (axon), an intermediate FM pulse generator (cell/cell body),

a power source

(metabolism), and the hard part — a truth table for specifying the

behavior of neurons versus

modes of stimulation.

There is sufficient evidence to conclude that

the truth table includes modifiable parameters that

amount to the impression of logical memory elements. This synaptic

chemistry is well beyond the

scope of this book, or my pea brain. The motivation here, is to proceed

from a simplistic frame,

toward a functional model; gathering only those encumbrances that the

endeavor seems to point to

as being inescapable requirements of the system.

There is evidence to support the view that use of

any given synapse increases its sensitivity;

meaning that future stimulation of that synapse will have a more pronounced

effect on the neuron

of the dendrite side.

It is indisputable that neurons produce

pulses more frequently during the periods of time

when they are being stimulated. There is evidence that this effect

increases with the degree of

stimulation to the neuron’s dendrites. This is a function of how many

other neurons are stimulating

a given neuron, and of how enhanced the active connections are.

There is some evidence that, at least in some

cases, a synapse will be enhanced to a greater

degree if the dendrites are being stimulated by a greater number of

neurons, and that this

enhancement is further increased in those cases where the dendrite’s

neuron has been induced to

fire, or fire more rapidly. This is a system mode that goes beyond

straightforward memory

impression — it is a support mechanism for association at the

fundamental component level of

neurological data transmission.

I would like to conjecture the possibility of

another level of complexity. The dendrite and cell body

system may have a further degree of sensitivity, relative to the matching

of stimulation patterns, on

a neuron’s dendrites, with the prior impression of similar patterns.

The mechanism that would

implement this behavior might have arisen from the fundamental process

of cell restoration. The

DNA/RNA process might have evolved a memory capacity of its own, to

deal with the demands of

stimulation to the cell. An organized affiliation with the dendrites

would be a more efficient solution

to the “problem.” This could have served as an evolutionary step toward

enhanced sensitivity of

specific stimulation patterns in the dendrites.

Another characteristic of neurons, that might

be easily overlooked by a modeler, is that they tire

out. Be careful about judging characteristics as defects. Evolution

deals with them. It always works

with reality. It utilizes the rules it’s composed of.

Neurons also fire spontaneously at a “base

rate;” a minimum low frequency. Their stand-by

sensitivity goes up with time, until they just “go off.” A typical

rate is once per second. The

maximum stimulated rate is about a hundred times in a tenth of a second.

The Silicon Brain

The silicon brain will be an arrangement of

silicon neurons that takes advantage of all of the

empirical “trial and error” work that mother nature has done, to produce

the functions of interest.

Basically, neurons communicate information from the senses to the cortex,

and from the cortex to

various muscles.

The job of tracing the actual neural pathways

has been extremely difficult. Imagine

troubleshooting a circuit composed of billions of transparent, microscopic

“wires.” Nevertheless,

results are coming to print.

There have been surprises. To me, the most

interesting one is the general trend to supply

abundant, dispersed feedback between the transition levels of data

flow. Data does not just go from

the eye to the cortex, for example. It makes stops along the way, where

it meets up with about ten

times as many lines leading toward it from the cortex. Yes; the brain

sends information into your

eyes!

We also have data that suggests that we see

about ten times more visual content than there is

actual photon information reaching our eyes. How can we see detail

that isn’t contained in the

electromagnetic communication? It is fun to speculate that some sort

of identity, between the

person and his environment, is responsible. We all “ESP” most of our

information in — all we need

to operate on, to accomplish this, is a minimal sampling of the image.

This is fun, but not as

reasonably available as the more apparent explanation. The extra information

comes from our

memory. We learn how to see. We associate coincidences in great number,

from great detail, over a

great many “frames,” and linkages of combinations of such frames. Consciousness

is this memory-

based associative process, that places pattern meaning relative to

itself and other meaning, over

time; so vision is perceived.

The Silicon Organism

When we create a silicon brain with our silicon

neurons, we will have choices to make that are

very similar to those concerned with parenthood. In addition, we will

have a variety of choices that

are beyond that familiar realm.

We can choose to include vision, hearing, speech,

and various motor capacities. If we succeed in

producing systems that are capable of higher learning, I suspect that

they will naturally develop

some emotion. I think we will have an option of facilitating this inclination,

and will find that it is an

important factor in learning. I suspect that we will find that learning

improves with the potential for

happiness.

We may get this far, and create opportunities

for some very specialized job descriptions. Silicon

organisms could develop with some very unique senses and motor skills,

derived from the

instrumentation of our technological industries.

BEEPERS FOR COMMODORE 128

This section was initially intended to be the

whole book. In developing the presentation, I found

it impossible to ignore the philosophy that was its source. The philosophy

itself developed more

during the course of arranging its words for print. The program is

my attempt to approach a

mathematical-scientific treatment of the subject. I apologize to those

who require a more standard

mathematical train of expressions. This is the direction I was most

strongly attracted toward; and

now this is the direction of my momentum. When this book is done, I

intend to return my focus to the

program development; moving into the PC.

BEEPERS CONTENTS

Program Description

Beepers and Sleep

General Stimulation

Adjusting Beepers

Reflection as an Aid to Learning

Ongoing General Feedback Learning

Teaching Beepers

Program Flow Chart

Beepers Ear Schematic

Register Designations

Coarse Memory Map

The Screen

Program Modes

Program Listing:

The Scan

Indexing

AOL/SWAP/STORE/FIRES/TIRE

KHS

Dual Area Regulation

KHS, Regulation and Consciousness

Set-Up, Load and Run

Mathematical Analysis

Beepers Improvements

Beepers are simple little creatures that live

in a very small computer. They are real in the sense

that they interact with the world, each other, and themselves. Their

behavior is an ongoing process

of development, built out of these interactions.

The beepers have been designed with functional

characteristics that parallel some of the

principles of mammalian neurological interaction. They are a product

of a limited sampling of a

diverse range of scientific literature, as well as of a number of assumptions;

and of corrections

brought about by problems exposed in development of the program.

Program Description

The program defines two beepers. The

program organizes the computer into two sets of Ns

(neurons). It handles each N, one at a time. It looks at each N to

see if it is active, or at rest. It does

this with one beeper, and then it essentially repeats itself to do

it with the other beeper. Then the

whole cycle is repeated.

In each cycle, a different pattern of Ns is

involved. It is this pattern, and its changing character

relative to itself, that is the developing definition of self-meaning.

At the same time, it is a depiction

of the world, pieced together with an ongoing influx of abstractions

from the world.

You could say that the main job of the program

is to detect the quiet Ns as quickly as possible. I

guess that in any given cycle, or “reality frame,” there should be

approximately 10% of the Ns active.

Thus, the program has been set up to run through the quiet majority

of Ns as quickly as possible. It

will become apparent that, in terms of what we’re attempting to accomplish

here, the limiting factor

imposed by the hardware is not memory capacity, but rather, speed.

To get more Ns involved you

need more speed. Memory comes more from the number of Ns than from

how big each one is;

though both factors contribute. You have about 10^10 or 10^11

Ns; each one “knows” up to 10^4 other Ns.

The number of Ns is 10^6 times more important than

their “size” — their capacity to immediately

access the rest of the potential process — for humans.

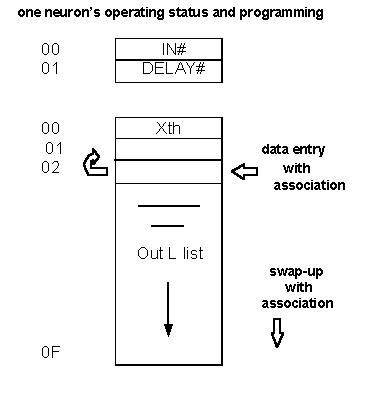

To facilitate speed, and for a few other conveniences,

the Ns themselves are split up into two

parts. The small part occupies two bytes, and the “large” part takes

sixteen. So, each N consists of

18 8-bit bytes of data. Of course, the data won’t run itself; the bulk

of each N is actually the section

of the program called the “N Loop.” This is like the DNA; it tells

each N how to behave, and they all

behave about the same way. It’s mainly their relative “position” and

data that differentiate them.

Obviously, this is simplistic; but given the starting constraints, the

approach is the best compromise

I could develop.

The first byte of N, in its small part, is

its dendrite area. Other Ns will stimulate this area

(literally!) by increasing the numeric hex value there. (In a prior,

more complex system, the N was

sensitive to the particular pattern of bits set in this dendrite register...

it kept a short list of “familiar”

stimulation patterns.) This system is FM, like real Ns are; and a given

N will “Fire” with a higher rate

of repetition if its dendrite area is being more heavily stimulated

(the maximum rate is once every

other Main Loop cycle — one loop for each beeper).

For purposes of speed of indexing data, the

small part needed a second byte; as a sort of

“spacer.” Since these first two bytes are visible on the screen (in

slow mode only) for one of the two

beepers, the best other use to display was a byte called “delay.” This

byte is similar to the dendrite

IN# byte, in that it relates to the activity level of the given N it

belongs to. It is needed to determine

how often the N will fire. It’s a timer that runs out to trigger firing

that N on that main cycle. It

provides an opportunity, as does the IN#, to custom tailor the response

and activity characteristics

of a given N in a given “cortical area.”

The memory map of the C128 from 2000 toward

35FF is taken up by this first small part of some

twenty-eight hundred Ns (all addresses are in hex; all quantities are

decimal, unless preceded by a

“$”). In bank 00 there is one set for one beeper, and in bank 01 there

is the other set for the other

beeper. The program occupies 0B00 to 1000, and 1300 to 1FFF, almost

identically in each of the two

banks. The rest of the area below 1300 is taken by C128 operations.

The C128 also uses a few bytes

starting at FF00; and the rest used by the beepers is from 3600 to

FE43, and a scratch pad area

above FF05. I use the Warp Drive cartridge, to speed disk loading,

saves, and utilities; so there is

some use up at the high end there that you have to work around.

The area from 3600 toward 4BFF is taken up

by pointers. These are used by the N Loop’s

indexing system to access the larger part of each N. Those larger parts

take up from 4C00 toward

FC00.
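
The address ranges quoted above can be cross-checked with a little arithmetic. The following Python sketch is only an illustration and not part of the beepers program; the constant and function names are made up for the example, and it assumes that 35FF and 4BFF are inclusive end addresses and that the large parts stop just below FC00.

```python
# Address ranges quoted in the text (hex). All names here are illustrative.
SMALL_START, SMALL_END = 0x2000, 0x35FF      # 2-byte small parts
POINTER_START, POINTER_END = 0x3600, 0x4BFF  # pointers used by the N Loop
LARGE_START, LARGE_LIMIT = 0x4C00, 0xFC00    # 16-byte large parts

SMALL_BYTES = 2                   # IN# byte plus delay byte
NS_PER_PAGE = 256 // SMALL_BYTES  # a 256-byte page holds 128 small parts


def memory_summary():
    """Return the counts implied by the quoted address ranges."""
    small_area = SMALL_END - SMALL_START + 1
    n_count = small_area // SMALL_BYTES
    pointer_area = POINTER_END - POINTER_START + 1
    large_area = LARGE_LIMIT - LARGE_START  # assumes FC00 itself is excluded
    return {
        "n_count": n_count,
        "pages": n_count // NS_PER_PAGE,
        "pointer_bytes_per_n": pointer_area // n_count,
        "large_bytes_per_n": large_area // n_count,
    }
```

Under these assumptions the ranges work out to 2816 Ns (the "some twenty-eight hundred" of the text) in 22 pages, with 2 pointer bytes and 16 large-part bytes for each N.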

The first byte, in the larger set of sixteen,

is called the “xth.” “X” is the number of times an N can

Fire before it gets tired and has to take a “time out.” The time out

consists of clearing the IN# — in

other words, it may miss firing the next time, but then is ready to

fire again, x times. This has proven

to be sufficient interruption, in this system, to avoid the problem

of pattern repetition; a problem

especially during initial development of the data assimilation process.

Pattern repetition is the

tendency for a limited set of Ns to get involved with each other, and

tie up all the available time,

permanently. Another requirement, for dealing with this problem, is

inhibition — Ns not only

stimulate other Ns, but also do un-stimulation. How this has come to

be implemented should be

described shortly, when discussing the gross organization of N interconnection.

The remaining fifteen bytes per N contain the

intelligence. They are a list of which other Ns a

given N will hit when it Fires. To get on this list, you have to be

a neighboring N that is active or

ready to Fire at the same time that this N is going to Fire. This is

the mechanism that implements the

associative filing of data. The gross structure is set up in such a

way that patterns, and their

temporal sequence, are associated.
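
The byte layout described over the last few paragraphs can be pictured as a small record. This is a hedged Python sketch rather than the actual 6502 code: the class, field, and method names are invented for illustration, the xth byte is assumed to count Fires upward (the text only says it governs tiring), and each list entry is simply stored as an integer from 0 to 255, because how an entry encodes its target N is not spelled out here.

```python
from dataclasses import dataclass, field
from typing import List

LIST_SLOTS = 15  # the "remaining fifteen bytes per N"


@dataclass
class N:
    """One beeper neuron: a 2-byte small part plus a 16-byte large part."""
    in_level: int = 0  # small part, byte 1: dendrite IN# (stimulation level)
    delay: int = 0     # small part, byte 2: timer that runs out to trigger a Fire
    xth: int = 0       # large part, byte 1: assumed to count Fires so far
    targets: List[int] = field(default_factory=lambda: [0] * LIST_SLOTS)

    def small_part(self) -> bytes:
        return bytes([self.in_level, self.delay])

    def large_part(self) -> bytes:
        return bytes([self.xth] + self.targets)

    def stimulate(self, amount: int = 1) -> None:
        """Another N hits this one by raising its IN# (capped at one byte)."""
        self.in_level = min(0xFF, self.in_level + amount)

    def fire(self, x_limit: int) -> bool:
        """Count one Fire; on the x-th, clear the IN# and report a time out."""
        self.xth += 1
        if self.xth >= x_limit:
            self.in_level = 0  # the "time out" consists of clearing the IN#
            self.xth = 0
            return True
        return False
```

Packing the two parts separately mirrors the split into a fast-scanned small part and a pointer-indexed large part; together they come to the 18 bytes per N mentioned earlier.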

Each N’s list does not fill, and then just

stay that way. New thoughts have a chance to work their

way into the lists, at the expense of the least used data. In a very

large system, the thoughts

producible by that unused data would get eaten away at; but not completely.

Because of the method

of memory distribution, and the laws of mathematical probability that

actually control reality, a

faint image would almost always be retained. With this faint image,

a little association through

repeated need would re-install the original data — with many of the

Ns involved being different

ones; but with a fairly accurate regeneration of the original relative

meaning.
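
One way such a prioritized list could behave is sketched below in Python. This is an assumption made for illustration, not the program's documented method: a used entry swaps one slot toward the front of the list, and a new association overwrites the last slot once the list is full. The function name and the list representation are hypothetical.

```python
def note_association(entries, target, capacity=15):
    """Update one N's target list in place and return it.

    A used entry swaps one slot toward the front. An unknown target is
    appended while there is room; otherwise it overwrites the last slot,
    which tends to hold the least-used entry under this scheme.
    """
    if target in entries:
        i = entries.index(target)
        if i > 0:
            entries[i - 1], entries[i] = entries[i], entries[i - 1]
    elif len(entries) < capacity:
        entries.append(target)
    else:
        entries[-1] = target
    return entries
```

The appeal of an ordering scheme like this is that it needs no extra bytes for use counts: position in the list stands in for how often an entry has been used.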

I suspect that, in time, this process of prioritized

list-filling elevates itself. Meaning is developed,

relative to other meaning. Initial meaning serves as a basis out of

which higher meaning can develop

and interact. The initial meaning becomes less and less a focus — it

is less and less used — and

much of it eventually becomes essentially uninvolved.

Of the various routines in the program, the one that

most defines the gross structure of “brain

anatomy” is called the “Out M” routine. This routine connects sections

of cortex to other sections

with a general Input-to-HighArea-to-Output directed-ness.

The routine is simplified and

accelerated by allowing the general organization of Ns

to fall where it wants to by virtue of

hexidecimentality (sorry).

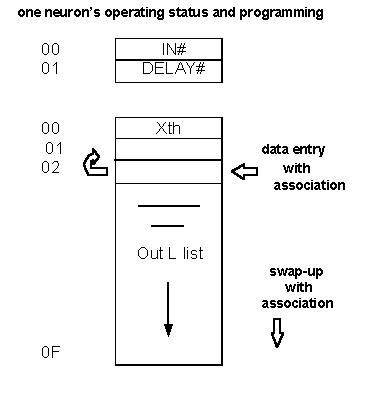

The C128 memory is approximately a pair of

C64s — two banks of 64K of memory, accessible to

an 8-bit “6502” CPU. In 6502 lingo, a page of memory is 256 8-bit bytes.

For the beepers, a page of

neurons is 128 neurons wide. This is because the small part is 2 bytes

wide. The small part is where

all the action is. The beeper dendrites are where the Fires hit. Everything

else is going on in the

background, or internally, within the Ns, if you will.

In addressing memory, 255 is the maximum LSB

(least significant byte) for accessing a byte “on

your page.” To access other pages, you need an MSB (most significant

byte), which can also go to

255, taking you up to 64K altogether (there are 256 addresses to each

part here — ranging from 0 to

255). The “M” in “MSB” is the “M” in the “Out M” routine. The pages

of Ns, 128 wide, are piled up 22

pages high. Any N can quickly affect another N directly above or below

it by manipulating the MSB

involved, and using the LSBs that are already in place.
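
The MSB/LSB trick can be shown with plain address arithmetic. The Python sketch below is illustrative only; the function names are invented, and it assumes page 0 of the stack starts at 2000 (the start of the small parts) and that column c of a page sits at LSB 2c, since each small part is two bytes wide.

```python
SMALL_BASE = 0x2000   # start of the small parts, from the memory map
PAGES = 22
NS_PER_PAGE = 128


def small_part_address(page: int, column: int) -> int:
    """Address of the IN# byte for the N at (page, column)."""
    if not (0 <= page < PAGES and 0 <= column < NS_PER_PAGE):
        raise ValueError("page or column out of range")
    msb = (SMALL_BASE >> 8) + page  # one 256-byte memory page per page of Ns
    lsb = column * 2                # two bytes per small part
    return (msb << 8) | lsb


def vertical_neighbor(address: int, page_step: int) -> int:
    """Keep the LSB, change only the MSB: the N directly above or below."""
    msb, lsb = address >> 8, address & 0xFF
    return ((msb + page_step) << 8) | lsb
```

For example, `vertical_neighbor(0x2A10, 1)` gives `0x2B10`: the same column, one page along. Wrapping at the ends of the stack is left out of this sketch.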

What would be the “Out L” routine is broken

into two routines — “Out L Stim” (collateral stim)

and “AOL” (Aim the Out L list entry). These routines provide side-to-side

action within a given page,

while the Out M routine provides up-or-down action between the pages.

Together, the system is an

active matrix. The rules composing the routines have been chosen to

allow the capture of temporal

associations within the matrix.

When you hear the word “CAT,” you hear the

“C” sound first, then the “A” sound; and after this

temporally-ordered combination, you hear the “T” sound. Hearing them

in rapid succession

conjures up whatever associations you think of when you hear the word

“CAT.” This works, and it

works without confusing the “C” sound in cat, with the “C” sound in

canary or catsup. It works right

because the active word itself is a combination unlock into your mind.

The important ingredients of

the combination are the component sounds, and their order. The exact

timing is of less consequence

— but timing does produce spaces relative to other timing, and the

spaces can affect the relative

meaning. Relative amplitude is of even less significance. Absolute

pitch is not a factor, until the

physical limits of the ear are reached. Relative pitch is the

primary structure of meaning in the

sound train.

When you hear the word “CAT,” the “C” sound

produces a neural stimulation that is very similar

to that which is produced by any word starting with the “C” sound.

The particular pattern of Ns

stimulated, leads toward the cortex, but makes several transitions

along the way. At each transition

there is an abundance of neural transmission leading back to

the area of prior stimulation. Not only

is it going the wrong way, but there is a lot of it ... about ten times

as much as leading in ... and it’s

usually dispersed ... scattered around randomly. How could evolution,

in all its wisdom, be so

careless!

All that information coming back to your ear,

after the “C” sound went in, is “looking” for the next

sound. It is anticipatory stimulation. It wants to mate with something

familiar. If the “A” sound comes

next, then a characteristic secondary pattern of feedback will be set

up to look for the third sound,

that is a much more unique pattern than if it were the first one fed

back. Furthermore, as the

real-world series of sounds pile into your ear, the feed-in, feed-back

process finds its way into a

geometric progression of potential combinations. Each transition area

sends dispersed feedback to

the one before it, as you make your way to the cortex. Generally, the

feed-in pathways produce a

neat orderly map of excitation at each transition area, right up to

the primary cortical area of the

given sense involved. (This is, of course, a simplification and generalization

of what really goes on.

For one thing, the neural “cycle rate” is some one thousandth of a

second, so that many feedback

cycles are possible within the time frame of single phonic sounds.

This probably helps with

handling variability of timing in phonic relationships.)

Association is at the heart of every level

of the thought process. It not only controls the flow of

relative meaning in your thoughts; it is the very structure of incoming

communicated intelligence

itself.

Extraneous neural activity, such as heart-rate

stimulation, and the general random neural noise

level, have no effect on consciousness, because these activities do

not contribute meaning to the

temporal pattern sequence. Only meaningful components can add to the

resolution, depth, or

degree of consciousness; because relative meaning itself is the consciousness.

Would-be

meaningless components can’t degrade it, because they aren’t a part

of it. (I’m not referring to

distracting thoughts — these you become aware of, due to their meaning.)

At the center of the stack of 22 pages of Ns

is a pair of pages called the “Out Page” and the “In

Page.” These can be thought of as the output and sensory ports leading

from/toward the motor

cortex and somatic cortex areas; or the speech motor cortex and the

auditory cortex.

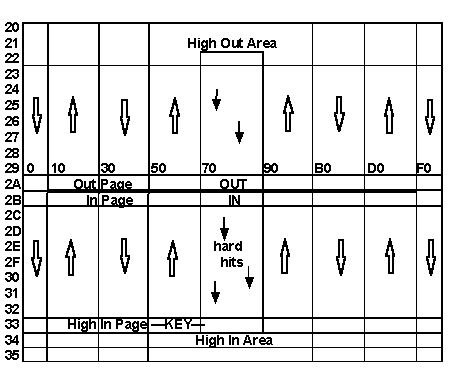

Sound from the true-pitch keyboard or the

ear microphone is processed by the mic circuit into

a voltage level that is determined by the instantaneous frequency of

the sound. The voltage is

converted to a resistance for the Game Port A to D. In other words,

in the computer, a register has

a value in it that is controlled by the pitch of the sound in the room.

The sound-source should be

fairly sinusoidal — it should be pure tones. Whistling is good.

The keyboard and beepers each

drive one of the three C128 voices, using the triangle wave form. Most

other wave forms will produce

unreliable error data.

The information is supplied to one or both

beepers (whoever is “awake”) at their “In Area;” an

area sixteen Ns wide, near the middle of the In Page. This routine

is called “Mic>Spectrum.” The 16

Ns serve as frequency centers. Only one or two of the 16 Ns are stimulated

for a given pitch. The

relative weight of stimulation on two neighboring Ns represents the

frequency of that moment. With

16 stim levels and 16 Ns, 256 frequencies can be depicted; within a

range of about two octaves.

Stimulation takes a little time to “drain off” from beeper N dendrites,

so that remnants of prior stims

tend to be present in the In Area as new stims are applied. In Page

Ns are restricted from hitting In

Area Ns, but In Area Ns are allowed to hit any other non-In Area Ns

on the In Page. The In Area is

meant to be the area and level where an accurate perceptual map first

impinges on the senses; like

the cones and rods of the eye.
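
The idea of depicting 256 frequencies with 16 Ns and 16 stim levels can be sketched as follows. This Python fragment is a guess at one workable encoding, not the actual Mic>Spectrum routine: it assumes the register value runs from 0 to 255, that the upper four bits choose the frequency center, and that the lower four bits shift weight onto the next N. The function name is hypothetical.

```python
from typing import List

IN_AREA_WIDTH = 16  # Ns serving as frequency centers
STIM_LEVELS = 16    # stimulation levels per N


def mic_to_spectrum(value: int) -> List[int]:
    """Spread one pitch reading (0-255) over one or two neighboring Ns."""
    if not 0 <= value < IN_AREA_WIDTH * STIM_LEVELS:
        raise ValueError("value must be in 0..255")
    center, frac = divmod(value, STIM_LEVELS)
    stims = [0] * IN_AREA_WIDTH
    stims[center] = STIM_LEVELS - frac  # weight left on the nearer center
    if frac and center + 1 < IN_AREA_WIDTH:
        stims[center + 1] = frac        # remainder goes to the neighbor
    return stims
```

With this scheme every one of the 256 readings produces a distinct pattern, and never more than two Ns are stimulated for a given pitch.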

Activity on the Out Page produces a pattern

of dendrite IN#s on the 16 Ns in the Out Area; near

the center of the Out Page; one page before the corresponding In Area

on the In Page. These Out

Area IN#s are used as weights on frequency centers, to arrive at a

single-tone frequency result, for

each given cycle with activity in the Out Area. The frequency is shifted

and ranged to approximately

correspond to the In Area spectrum. In other words, the Out Area is

handled by the program in such

a way as to simulate simplified cerebellar action. The result is used

to set the frequency of a C128

voice. (In a very abstract and distilled sense, you could say the beepers

have eyes for ears, and

hands for a mouth.) A delay time is used to ensure that tones are sounded

for enough time to

register accurately in the mic circuit and game port. A tone can last

longer if the given Main Loop

cycle is running slow that moment. The tone is delivered to the room,

for pick-up by the mic as well

as for monitoring beeper behavior. The temporal matrix includes exceptions

and diversions that

promote learning through feedback.
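
Using the Out Area IN#s as weights on frequency centers amounts to something like a weighted average. The Python sketch below shows that reading of the text; it is an assumption, since the program's exact shifting and ranging are not given here, and the function name and the 0 to 255 output scale are illustrative choices.

```python
from typing import List, Optional

STEPS_PER_CENTER = 16  # assumed to match the 16 stim levels of the In Area


def out_area_to_value(in_levels: List[int]) -> Optional[int]:
    """Collapse the Out Area IN#s into one tone value, or None if silent."""
    total = sum(in_levels)
    if total == 0:
        return None  # no activity in the Out Area this cycle
    centroid = sum(i * w for i, w in enumerate(in_levels)) / total
    return round(centroid * STEPS_PER_CENTER)
```

Equal weights on centers 1 and 2, for instance, give a centroid of 1.5 and a tone value of 24, halfway between the two centers.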

The Out L and Out M routines spread the In

Page and Out Page activity throughout the matrix.

While most pages can stim the page above or below, there is isolation

between the Out Page and the

In Page, except at the periphery of these pages. This leakage is meant

to parallel the “voice-

muscle-sense,” or sense of “touch” we have in our various speaking

apparatus, as well as “mind’s

ear” internal data flow. The isolation between these pages defines

“ends” to the system, to ensure

that the primary resultant communication of the system with itself

is through the air; so that human

interaction, through the air, will be on the same level as the system’s

own basic feedback

orientation.

The Out M stimulation has been channeled into

one-way sections of 16 Ns of width. This

simplifies handling the I/O pathways, and provides a neat set-up for

bidirectional temporal loop

formation.

The channel including the In Area and Out

Area is granted higher status — these Out M hits are

strong, to simulate reliable transmission along primary data pathways.

Ns within these channels

should probably be restricted from Out L hitting other Ns within the

channel, on the same page;

beyond this, however, any N can hit any other N on its page. The current

implementation only

applies this restriction in the In Area; but encourages it for all

the 16 N-wide channels, on all pages,

by initiating the AOL 16 Ns ahead, and placing the Out L stim (collateral

stim) 16 Ns behind, the

current N position on the page.

Various arrangements have been tried. Out

M routines that hit +1, +3, +7 pages ahead, and -2, -4,

-6 pages behind, simultaneously, for example. It didn’t much seem to

matter, at least at this level of

N# depth (and with an IN pattern-sensitive non-FM system), exactly

how you set it up, but you must

include -1 inhibition. You can have more inhibition, but you have to

have -1 included. -1 inhibition

means that while you hit one or more pages ahead, and/or behind, with

respect to the scanning

direction, you un-stimulate the N one page behind. In the system

here, -1 inhibition is one page the

opposite direction of the 16 N-wide channel you’re in. Without this rule

contributing to the

characteristics of propagation, the system will get tied up in tiny

loops that take up all the time;

while no meaningful interplay of real-world data and internal data

is handled.

Pattern association, and temporal pattern sequence

association, are facilitated within the overall

matrix by having each active N set up pre- and post-stimulations

of other Ns, that stand available

for other active Ns to find simultaneously active. The stims to Ns

about to be scanned this cycle,

facilitate immediate pattern handling. The stims to Ns already

scanned this cycle, facilitate

temporal sequence handling — they are a link from one “frame” in time

to the next. (In addition, any

active N is a link to the future, because the stimulation level on

its input is only reduced, not erased,

each cycle. It is reduced a lot more when the N times out to Fire.

It is cleared by the N’s Tire routine

when the xth Fire is reached.) The Out L routines support both the

pattern and temporal functions

within a given page of Ns. The Out M routine supports both functions

among the pages. The Out M

routine also provides the primary I/O pathways; which are the orderly,

mapped representation of

the world/intents, maintained, but compounded upon, through most of

the pages of Ns.

The program handles each N, one at a time,

in the order that they exist in memory. This scanning

process begins at 2000. This is the address mapped onto the video display,

in the upper left corner.

The 40-column display shows 4 Ns per character box, since the box is

8×8 pixels, or a stack of 8

bytes, and there are 2 bytes per N “small part”: the IN#, or dendrite,

and the delay timer for

repetition rate. Unfortunately, 128 Ns don’t nicely fill in just one

40 character line — 160 do — so

the pages don’t show up neatly stacked like they are in the figure

here.
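
The display arithmetic can be checked with a short Python sketch. It assumes the usual bitmap layout, where memory runs character box by character box across each 40-column line; the function names are hypothetical.

```python
BYTES_PER_N = 2       # IN# plus delay timer (the N "small part")
BYTES_PER_BOX = 8     # one 8x8 character box is a stack of 8 bytes
COLUMNS = 40
NS_PER_PAGE = 128

NS_PER_BOX = BYTES_PER_BOX // BYTES_PER_N     # 4 Ns per character box
NS_PER_LINE = NS_PER_BOX * COLUMNS            # 160 Ns per character line


def screen_position(n_index):
    """Return (character row, column, slot in box) for the n-th N,
    counting from the start of the scan."""
    row, rest = divmod(n_index, NS_PER_LINE)
    col, slot = divmod(rest, NS_PER_BOX)
    return row, col, slot


def page_start(page):
    """Where the first N of a given page lands on the display."""
    return screen_position(page * NS_PER_PAGE)
```

Under these assumptions the second page starts at column 32 of the first character line, and a page only begins at the left edge again every fifth page, which is why the pages do not stack neatly.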

As the figure shows, there is a limit to the

I/O channels. The Input Channel ends in the High Area.

The Output Channel develops out of the High Area. The top 3 pages of

Ns, and the bottom 3 pages,

taken together, comprise the High Area. The top page is related to

the bottom page the same way

any two adjacent pages in the stack are related. In other words, the

stack is not linear, with ends;

rather, it wraps to form an endless cylinder.

The High Area is meant to act as associative

cortex, while the rest of the matrix acts as transition

levels from I/O organ through thalamus to primary cortex.

The system is regulated, to keep the percent

of Ns active at a somewhat constant level, and

even out the cycle times into an overall system clock that runs at

a fraction of a second. While it

would be good to have a fraction like 1/1000, the little system here

grunts out at about 1/3 to 1/20

second. A good ’94 PC could have 10 times as many Ns, and still run

10 times as fast. A quad

Pentium might have Ns 10 times as big too.

Regulation is a dual-area process. The High

Area is regarded as dominant — the area that must

stay awake and active; to attend to input and/or decide to produce

output. The rest of the matrix is

referred to as the Peripheral Area. Regulation allots a range of time

to the High Area, and a larger

range of time to the total matrix.

Regulation is accomplished by actually timing

the portions of cycle time taken up by the areas,

and correcting those times in upcoming cycles by altering the sensitivity

of all of the Ns within the

given area. The sensitivity is a threshold # for CMP with the IN#,

that is used when handling each

stimulated N in the N Loop and determining if its IN# grants it status

as an active N.
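
As a rough sketch of this feedback, in Python rather than ML: one area's threshold is nudged up when its share of the cycle ran long, and down when it ran short. The step size, the time units, and the use of "greater than or equal" for the comparison are assumptions, and the function names are hypothetical.

```python
def regulate(threshold, elapsed, too_short, too_long, step=1):
    """Return the corrected sensitivity threshold for one area.

    elapsed is the measured portion of the last cycle spent in the area;
    too_short and too_long are that area's pair of trigger values.
    """
    if elapsed > too_long:
        return min(255, threshold + step)   # fewer Ns will qualify
    if elapsed < too_short:
        return max(0, threshold - step)     # more Ns will qualify
    return threshold


def is_active(in_level, threshold):
    """Compare an N's IN# with the area threshold (assumed >=)."""
    return in_level >= threshold
```

The same routine would be called twice per cycle, once with the High Area's timing and trigger pair and once with those of the total matrix.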

I suspect that regulation parallels some of

the results of our own metabolic regulatory

requirements. These systems probably involve the thalamus, and nearby

neuro-glandular

structures, as well as the limbic and autonomic systems. There is only

so much oxygen, fuel, and

exhaust available for the neurons, so they can’t all go at once! Furthermore,

such a pattern is no

longer a pattern. Regulation is a force that focuses the pattern into

one of dominant strength,

relating the process more to itself and the world, and less to anything

and everything that could

possibly be brought up in association. Our thoughts evolve. Evolution

has taxed DNA, but has

produced a system that can carry on the spirit of evolution in the

world of thought we call society.

Regulation also involves stimulation, but

regulation alone is not enough to keep this thing

awake. When the room goes quiet, it will quickly quit talking to itself,

and go dead until some

external sound sets things in motion again. Stimulation must be internally

provided, as it is for you.

This action was first thought to be “noise,” or a possible source of

error; so was applied for a single

cycle, only when the matrix went into coma. Various areas were tried,

but it seemed sensible to stim

the High Area. The Peripheral Area will be stimmed by the environment;

which may include output

generated out of the High Area. It’s the “decider” that should stay

awake — the rest can rest, or be

used according to it. This system developed an exciting edge when I

realized that the stimulation

need not be random; but that it could be a repeat of whatever the last

pattern was at some spot in the

high area. This seemed like a way of “continuing a thought,” where

it left off or dissolved. After

some more reading, including the topic of the hippocampus, I realized

that this Key Hi Stim “KHS”

routine was doing almost what was being described as hippocampal action.

About the only

difference was that you don’t wait for dissolution of activity — the

positive feedback loops involved

will produce a constant stimulation with a lot of momentum (hippo).

New features could add on to

this stim pattern, if the hippo weren’t taxed at that moment. Ongoing

features only drop out as

neurons tire — but real neural nets are set up as vastly redundant

arrays, capable of learning to

pass on functions as Ns tire — so patterns can maintain a more constant

effect “as needed.” It

comes down to a question of priorities. If the situation, or train

of thought calls for a more or less

new pattern, then it will be modified. It may simplify down to something

basic, but the important

thing is that it keeps going, and you stay more or less aware, as a

prioritizer.

KHS is this system’s hippocampus. It applies

a “Key” each cycle, to the Input Page of the High

Area. The Key can grow if there is room in the Key Array. There can

be room as Ns tire, or as

regulation pinches them out of the action. The Key is a list of LSBs

for stimulating that High Area

Input Page. If you’re an N there, your chances of getting on the key

list are better if your IN# is

higher as the openings become available. It might be better to apply

the key to the two center pages

of the Hi Area (the top and bottom pages of the stack) (a number of

thoughts on improving the

system are covered in a later section). At this writing, I don’t know

just where the “fingers” of the

hippocampus reach in to the cortex. (It would be nice to consider the

limbic system too!) The KHS

affects about 1.4% of the matrix; in the neighborhood of the 3% used

by the hippo.
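
A minimal Python sketch of the Key idea follows. The Key Array size and the stim level are invented for illustration, and the rule for filling openings (strongest active non-member first) is only one way to give higher IN#s better odds; it is not taken from the program.

```python
KEY_SIZE = 8      # assumed capacity of the Key Array
KHS_STIM = 16     # assumed constant stim level applied by KHS


def apply_key(page, key):
    """Stimulate every N on the High Area Input Page listed in the Key."""
    for lsb in key:
        page[lsb] = min(255, page[lsb] + KHS_STIM)


def refresh_key(page, key, threshold, tired):
    """Drop members that tired or were pinched out by regulation,
    then fill the openings with the strongest active non-members."""
    kept = [lsb for lsb in key
            if lsb not in tired and page[lsb] >= threshold]
    candidates = sorted(
        (i for i in range(len(page))
         if i not in kept and i not in tired and page[i] >= threshold),
        key=lambda i: page[i],
        reverse=True,
    )
    room = max(0, KEY_SIZE - len(kept))
    return kept + candidates[:room]
```

Each cycle would call `apply_key` with the current Key, and `refresh_key` whenever an opening might have appeared.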

I think hippo momentum is responsible for

the advertised hippo quality of “somehow producing

new long term memory.” By holding a key, distributed to the general

cortex memory matrix, you

enhance the general “flavor” of the patterns being handled during the

key impression time. A

greater number of N-loop branch-offs carry on the meaning of a given

thought, into a greater

number of Ns, and for a longer time in each N involved. As these Ns

recover their strength, the

repair process chemically “cements” the memory in place at the associated

synapses. The deeper

the recovery, the stronger the cement. The more dramatic the event,

the more Ns involved, allowed

by regulation; especially in fight or flight situations. The more Ns

involved, the longer it will take for

new experiences to eat away at the image series, and bury it with relatively

stronger impressions,

supporting unrelated patterns. I suspect that the “cement” slowly weakens

if the images are not

replayed, in need, through association. It probably never goes away

completely, and may last

longer in Ns that aren’t in as much demand by other pattern sequences.

As I have pointed out, I am not making these

assumptions from a position of credibility. Ideas

like these have been piling up for years now, and I feel the need to

communicate them, in case someone

may be inclined to involve them in scientific study. Their source

is introspective; but the

interplay of literature and computer modeling has been more a focus

than being the thing I’m trying

to figure out.

It may seem that the beepers don’t need a system

to install long term memory, since the RAM will

do fine if you keep the power on. The neuron has been set up, though,

to grant higher status to

associations that are repeated more frequently. This characteristic

works together with the hippo

KHS function to establish a back-and-forth system of prioritization.

The outcome is an evolution of

patterns built on the survivability of long term components. The KHS

develops its own application

of constant general motivation for the matrix, out of the matrix. Its

Key is like a DNA sequence that

is constructed by experiences that it becomes more responsible for.

Short term memory is primarily the use of pre-existing

pattern data. It is essentially the act of

ongoing consciousness itself. There is always something new about any

experience though — the

order of familiar events, or the particular combination of familiar

qualities within a given moment.

Perhaps this factor of newness is distilled out, and piled into the

temporal area — and who knows

where else — as a series of sub-keys that can be accessed by association,

and by a special form of

association; relative chronological order.

We are now getting into an area that has not

yet been developed for the beepers, and probably

never will. The use of a hard drive and a PC comes to mind. Parts of

the RAM can be designated as

convertible; to be constantly replaced with “topical” memory data.

Sub-Keys can be developed by

experience and used as indexing labels to file and retrieve a given

data array. The size of the

Sub-Key and the data blocks would be geared to the size of the drive,

so that any possible key has

access to its block. The blocks start out “empty,” but develop data

just as any page of Ns would, for

example. Part of the active matrix becomes a set of musical rooms,

for the musical chairs the whole

thing is. But some optimal percentage of the rooms are kept in place

to give the thing an ongoing

constancy, which uses the convertibility as a utility. The convertible

area might be a complete

cross-section of the system; or it might be better to leave certain

levels out, such as the I/O ends.
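
The sizing rule, that every possible Sub-Key must reach a block on the drive, comes down to simple arithmetic. The Python sketch below is an illustration only; the drive size and block size in the usage note are hypothetical figures, not a design.

```python
def max_sub_key_bits(drive_bytes, block_bytes):
    """Largest Sub-Key width (in bits) such that every possible key
    value still has its own block on the drive."""
    blocks = drive_bytes // block_bytes
    if blocks < 1:
        raise ValueError("drive too small for even one block")
    return blocks.bit_length() - 1        # floor(log2(blocks))


def block_offset(sub_key, block_bytes):
    """Byte offset on the drive of the block filed under sub_key."""
    return sub_key * block_bytes
```

For example, a 100 MB drive cut into 256-byte blocks leaves room for an 18-bit Sub-Key; a larger block holds more data per key but shortens the key.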

This system might be vaguely analogous to the

temporal and frontal lobes, related through the

limbic system. Our system may be emotional because general and/or specific

modifications of the

chemical environment place neurons in various “modes” of sensitivity,

causing them to favor

different sets of patterns, as per the prior association of the given

chemical flavor dispensed for the

given experiential conditions. This would involve the autonomic system

as well, and computers

don’t need one. You just plug them in the wall, and they never need

worry. If we get very far with

this, we’re going to have some very interesting questions and decisions

to deal with.

Beepers and Sleep

There is probably more to sleep than allowing

N restoration. Granted, something this imperative

is probably behind the survival of organisms that become so vulnerable.

Sleep is necessary to

sustain a system that has developed advantages by “over-utilizing”

a set of Ns. Somehow,

intelligence is gleaned from a self-taxing system; and we came out

ahead by sacrificing 1/3 of the

day for it. The hippocampus involves only some 3% of the cortex, yet,

I suspect more than 3% of it

is active all the conscious time.

This mode can involve more than recharging

the Ns. It could be an opportunity to organize and

optimize the intelligence of the system. In the course of such routines,

the conscious experience

might be comparative non-sense — we use a safe time to get these jobs

done — a time when we are

inactive and uninvolved with society.

Deep sleep would seem to be a time when no

trains of thought are being supported. There is no

consciousness; at least no memory of any if awoken then. This is probably

the N regeneration cycle.

It may also be involved in the overall sleep cycle as a component for

memory “erasure” or memory

“cementing,” as per the complex chemical composition of neurophysiology.

Dream time, spent relatively still vulnerable,

indicates that there is more developed here than

recharging the Ns. It could be that this is simply the time when that

memory cementing takes place

— that it is accomplished during random re-play of the day’s various

N-involvement peaks.

Perhaps these peaks consist only or mainly of new involvement peaks

— somehow, chemically, we

don’t waste time re-enhancing long term memory already established.

This and/or the natural

associative process, running free of world and hippo guidance, could

explain the oddities of dream

consciousness.

If what has been said above, about deep sleep

and dream time, is all that is true, then there would

be no reason to involve a sleep system in the beepers. But, the most

recent evolution involves

emotions — complex intelligent motivation in social interplay. Evolution

always operates on

whatever opportunities have developed out of its own history. It doesn’t

cash in on all of them; but

it only succeeds by utilizing real, lasting opportunities.

I suspect that dream time also accomplishes

something very important and fundamental to the

human condition. It is a time when the net can establish and re-vitalize

a sense of self-identity. It is

a primary component in the development of conscious self-awareness.

Without it we would all

interact in a much simpler way... more like ants or bees. It is the

source of our motivations. It is the

construction of our primary and subsidiary goal trains.

Obviously, this self-identity would not develop,

or at least would not be compatible with society,

if it were not built out of social experiences. So we do operate in

an awake state for about 2/3 of the

time. We periodically retreat from social demands to distill our experiences

into the basic

accumulation of what we are inside; to produce that “where we are coming

from” that runs out our

decisions. It just so happened that the time to develop this procedure

was available as biological

“down time.”

The beepers have Ns and memory that don’t require

down time. They are allowed to speak to

themselves internally though, about a few minutes every hour, free

of outside influence. During

their “sleep,” the ear data is not used. Their tone “speech” is still

audible for monitoring, but they

only hear themselves through the sideline “mental” channels.

This system was considered by accident. After

a period of accidental deafness, I noticed the

character of the beepers to be more “awake” — more vital, assimilative,

able or “willing” to learn.

Repeated experiments with this have confirmed the feeling — but, as

always, the assessments in this

field are going to be composed more or less of feelings. I feel that

you are aware. The only test that

may confirm this will be my death. I am confident we will all take

this test. See ya around!

Another approach that may work better, or that

should perhaps be combined with the above, is

partial erasure of memory. It seems logical that we should make the

system more assimilative and

ready for new learning and behavior by removing less necessary data.

It is far more important that we have a keen

perception of the present, than that we go around

re-living yesterday in full detail. It seems logical that partial erasure

could be involved in the mode

of our general condition. First, you save all the strongest, most important

data (“Scooter” routine

— about every 5 minutes, the system stops for a second, while all the

data in partially empty Ns is

scooted over to the highest priority levels — so it won’t be written

over.) Then you erase the least

significant data in most Ns. (The “Erase” routine is not being implemented

here. It clears a few bytes

at the low priority end of the N’s Out LSB list.) When you awaken, you

might cycle back and forth

with these procedures a few times. You can re-construct some details

where necessary, using the

peak data you’ve saved. Meanwhile, there’s less chance that irrelevant

trains of thought will get

conjured up to compete with goals, instead of contributing to them. In

a large system, partial erasure

would leave a very full set of chronologically associative snippets

distributed in the cortex at a

relatively faint level of data density. I haven’t experimented with

this much — it seems more

appropriate to involve it with the PC.
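
The two procedures can be sketched on a single N's Out LSB list. This Python version assumes index 0 is the highest priority and that an unused slot holds the N's own address LSB; the function names and the number of slots erased are hypothetical.

```python
def scoot(out_list, own_lsb):
    """Scooter: pack the real entries toward the high-priority end,
    leaving the unused slots together at the low-priority end."""
    used = [x for x in out_list if x != own_lsb]
    return used + [own_lsb] * (len(out_list) - len(used))


def erase(out_list, own_lsb, count=2):
    """Erase: clear a few slots at the low-priority end of the list."""
    count = min(count, len(out_list))
    keep = len(out_list) - count
    return out_list[:keep] + [own_lsb] * count
```

Cycling between the two, as suggested above, would amount to calling `scoot` and then `erase` a few times in a row.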

Another question here concerns the hippo —

should it go off line? The beeper’s hippo doesn’t

need to rest. Our real hippo, being conveniently off to an area of

its own, could receive localized

chemical treatments. But, once again, we must consider the possibility

of multiple opportunities.

Perhaps the memory optimization procedure and/or self-identity definition

enhancement procedure

benefit from off-line conditions for the hippo, and/or modified function

thereof.

General Stimulation

Even with KHS, system regulation, and lots

of environmental stimulation, there is still a

fundamental problem with this system. The memory won’t get involved

— lots of it — most of it. This

may well be a result of basic layout and proportions I have chosen.

But there is evidence that you

receive a general random stimulation level. It might seem that this

would raise havoc with such a

fine-tuned and intricate system as your mind. Note, however, that a

random pattern series has no

meaning. This is one of the key ideas that has me believing that consciousness

is the relative

meaning, over time, of what is going on in the system. It uses

memory-based energetic interactions

like a substrate — a sub-dimensional medium — on which it can float

along as the substance that

only is by virtue of what it means to itself. Whatever else is going

on could only be conscious to

itself; or not be conscious.

To implement memory involvement, the beepers

have the “Out L Stim” routine, which minimizes

the randomness by involving an arbitrarily positioned collateral N,

every time any N fires. The

relative position is identical and constant for every N firing. I suspect

that this parallels the thalamic

and reticular action involved with general activity-level setting and

regulation, as per a given

required alertness level.

It should be pointed out that the pages of

Ns wrap from end to end, as well as from top-to-bottom

of the stack of pages. In other words, the matrix doesn’t form a pure

cylinder; it forms a doughnut —

a short fat one, ready to roll away from you like a wide tire. The

Out L stim routine hits the N, sixteen

Ns to the left. Where you would run off the page, you come in on the

right side, to continue toward

the left, 16 Ns from the current N being handled by the scan. The AOL

routine also wraps; and it is

oriented in the opposite direction. It starts 16 Ns to the right, and

continues up until it runs off the

right side to come in on the left side, and continue toward the right

until it finds an active N. If the

active N is already on its list, it swaps it up in priority level.

I think of this as “preparatory” — as

getting oriented to the topic. That data is related to what’s going

on, so we may need it now. AOL

then continues to look for a new list member. If it finds one, it swaps

it in to the second-to-the-lowest

position on the list (third-to-the-lowest might be better, but slower);

bumping that member to the

bottom rung. The one at the bottom is gone — overwritten. Then the

program is done with AOL and

goes on to Fire the Out L list, by increasing the IN# on the dendrites

of all the Ns on the list. If AOL

did not find a new member, or even a familiar one to swap up, the program

still goes on to Fire the

list, since you don’t get to the AOL routine unless the current N had

sufficient IN# level to Fire.

Unused slots on the Out L Fire list are filled with the address LSB

of the current N itself — the Fire

routine does not use those slots — an N is not allowed to hit itself.
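
The wrap-around and the list handling can be sketched in Python. This is a simplified reading of the description above, not the ML itself: the hit strength, the single pass around the page, and promotion by exactly one rung are assumptions, and all three function names are hypothetical.

```python
NS_PER_PAGE = 128
OFFSET = 16


def out_l_stim(page, n):
    """Minimal collateral stim to the N sixteen places to the left."""
    target = (n - OFFSET) % NS_PER_PAGE       # wraps off the left edge
    page[target] = min(255, page[target] + 1)


def aol(page, n, out_list, threshold):
    """Scan rightward from n + 16, wrapping around the page."""
    promoted = False
    for step in range(NS_PER_PAGE):
        cand = (n + OFFSET + step) % NS_PER_PAGE
        if cand == n or page[cand] < threshold:
            continue                          # skip self and inactive Ns
        if cand in out_list:
            if not promoted:                  # familiar: swap up one rung
                i = out_list.index(cand)
                if i > 0:
                    out_list[i - 1], out_list[i] = out_list[i], out_list[i - 1]
                promoted = True
            continue
        out_list[-1] = out_list[-2]           # bump down, overwriting bottom
        out_list[-2] = cand                   # new member, second from lowest
        return


def fire_out_l(page, n, out_list, strength=4):
    """Raise the IN# of every listed N; a slot holding n is unused."""
    for lsb in out_list:
        if lsb != n:
            page[lsb] = min(255, page[lsb] + strength)
```

For an N that has just qualified to Fire, the order of calls would be `aol`, then `fire_out_l`, with `out_l_stim` supplying the fixed collateral hit.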

The size of the N list has been proportioned

to the number of Ns it can access. You don’t want to

be able to hit all of them, or there would be nothing unique about

the pattern you hold. We must

make a trade-off between accessibility and uniqueness — the optimum

compromise produces the

greatest meaning vector.

It might seem like a horribly tedious job,

to type in the needed skeleton of 2800+ Ns, twice. It’s

easy though — some very small and simple ML routines do the work in

a second.

Adjusting Beepers

Besides having system regulation, KHS, environmental

stimulation, and Out L stimulations,

things will still not go well if a number of factors in the system

are not carefully adjusted, and

balanced with respect to each other, to assist the development of data

assimilation characteristics.

There is no interface for settings. Adjustments require an intimate

understanding of the system. In a

bigger computer there would be enough room to include some automating

routines, operating off of

timers and feature sensitivities.

Regulation uses two numbers to trigger off

of cycle times that are too short, or too long. It has a

separate pair of these numbers for each of the two areas it monitors

— the High Area, and the total

area. By adjusting these numbers, you force the system to involve a

smaller or larger number of Ns

in an average cycle — you indirectly adjust the cycle time, and affect

its variability. Perhaps the

most important consideration here is the relation of the High Area

to the periphery — the

proportion of time allotted, with respect to the proportion of Ns involved

— and, most of all, the

relationship between the upper threshold for the High Area, and the

lower threshold for the total

area. If everything else is right, you’ll see that this adjustment

controls attentiveness — the

tendency to stop “talking” and start “listening” when spoken to. There

isn’t enough time to do both

at once, with the settings given here; so the beepers “speak” in short

phrases, alternating with short

pauses. I feel that this makes sense for learning, in such a small

and simple system.

The rate at which Ns tire is a factor open

to adjustment. You may want a faster rate at younger

stages. With established data in place, you may get away with #FF.

The rest of the adjustment involves the degree

and balance between how hard various N

functions hit other Ns, and how quickly those IN# stimulation levels

are drained off.

As the program scans through the Ns, it watches

for various address events, in order to modify

itself, so as to be different types of Ns, with different physiological

jobs to handle. In other cases, it

is simply the particular routine that does hitting at a particular

strength. The KHS routine, for

example, always stims its Ns at a particular, constant level. Of course,

their repetition rate is always

a variable, and an important dimension to the hippo interaction. The

Out L Stim routine uses

minimum stimulation (ADC #01). Something has to be minimal, so set

it to #01, and adjust everything

else with respect to that. The Out M hits vary depending on where the

N is in the matrix. Input

channel Ns conduct reliably toward the High Area, for example. Any

stimulation of an In Area N will

make it fire. Out channel Ns conduct reliably toward the Out Area.

The In-side to Out-side reflection

(to be discussed shortly) is mild by comparison; and is completely

suspended for Ns on the Input

Page. All AOL lists hit all Ns with an intermediate value.

In balance with all these hitting levels is

the draining off of IN#s, upon Firing an N, or if the N had

sufficient IN# to be fully handled, even if it wasn’t ready to Fire

yet. The latter case is a mild SBC#,

while the former involves a number of consecutive LSRs. Without the

proper balance here, the

regulator will not have the pull needed to function, and the system

will either bog down, or fly off

the handle, virtually ignoring itself and the world.
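
The two kinds of drain can be put side by side in a short Python sketch. The subtraction amount and the number of shifts are illustrative guesses, and resetting the fire count when the N tires is an assumption; `drain` is a hypothetical name.

```python
def drain(in_level, fired, fire_count, sbc=2, shifts=3, tire_at=0xFF):
    """Return (new IN#, new fire count) after an active N is handled."""
    if not fired:
        # Handled but not yet ready to Fire: a mild subtraction (SBC).
        return max(0, in_level - sbc), fire_count
    fire_count += 1
    if fire_count >= tire_at:
        # The N tires: its stimulation is cleared outright.
        return 0, 0
    # Fired: several consecutive right shifts (LSRs), halving each time.
    return in_level >> shifts, fire_count
```

With these figures an IN# of 200 drops to 198 when merely handled, but to 25 on firing, which shows how much harder the firing drain pulls.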

Note that if the slow mode is used (to view

the action in the bank 00 beeper), the Dual Regulation

thresholds must be doubled, since the cycle time will be doubled.

Reflection as an Aid to Learning

As part of the Out M routine, the whole Input

Side is reflected to the whole Output Side; N for N,

in a one-to-one correspondence, like a mirror image; with the exception

of the In Page to Out Page

(which would be like mapping the ear directly to the vocal cords).

As environmental stim affects N

activity on the In side, corresponding stim is projected to the Out

side. This may seem frivolous, or

even like cheating, until you consider the long term consequences,

as nature may have done.

The stim to the Out side corresponds to Output

that was just produced there, to create sound that

affected the In side. The first such event will be the meeting of some

various activities, that can

develop AOL ties. This modification of the system becomes a new starting

point for subsequent

similar cycles. In time, “differences” become rare, and “expectations”

become the norm.

The Output teaches the Input how to hear;

and the Input teaches the Output how to speak; until

both sides are in agreement. Now, when the world says something that

the system has been saying,

it will have similar effect on the system. When the world says something

the system hasn’t been

saying, the reflection may help the system say it for the first time;

which may help it say it again,

until it, too, is “familiar.”

Ongoing General Feedback Learning

The hippo KHS routine sets activity in motion,

originating at the high area In page 33. By starting

things off at a high point of reflection between the In side and the

Out side, the bidirectional logic

waves take a full course in the proper directions in setting up CRs.

Forward waves lead to the

Output Page and randomly Fire. Reverse waves lead to the Input page,

setting up CR anticipation

links for those random Fire events. The Outs accurately hit the corresponding

Ins. The waves start

at the high middle, but soon are starting from one extreme end, heading

to the other. CRs are set that

fully reflect the chain of events that takes place when a given In

is hit by its associated Out. It

shouldn’t matter, basically, that the training procedure is random

with respect to which particular

note pattern “word” is being trained in which order. The purpose here

is simply to establish

one-to-one links in the Out N-In N relationship. Other training will

establish word-sound-order and

phrase-word-order associations, and so on, as you get into association

depth.

Teaching Beepers

The Teacher routine is included to lend some

structure to the background environment. It times

out to “play” one of two musical phrases to the room. One phrase is

a Mozart theme, the other is a

scale, in the same key. One or both beepers receive the data; depending

on which ones are awake.

The teacher timer is not a simple counter.

It is a count of a particular neural non-event. It is

decremented every time the Input half of the High Area is quiet (the

half that starts with the page that

the KHS hippo hits — regulation can bump the threshold above the highest

IN# level in the whole

first 1/2 of the High Area). Each beeper affects such a timer, that

controls one of the two phrases. It

is conceivable that the beepers learn to quell this area, in order

to elicit the recitals. I say this

because there has seemed to be an over-abundance of occasions where

the teacher has been

triggered by my “talking” to them; particularly when it has been a

while since they’ve been whistled

to. Input stimulation should have the opposite effect — it should get

the High Area more activated,

especially on the input side. However, I can’t say that I’ve thoroughly

investigated this — there

could be a simple underlying mechanism affecting the odds. Note that

it must interact with you

differently than with the other beeper. My hope is that it is a mechanism,

not so simple, involving

KHS, regulation, and the inherent meaning of world-system interaction.

The meaning, supported by

the system, becomes the operator of the system — it is the ongoing

operation — it is reaction to the

world, created out of past and present information from the world.

The operation takes on

complexity beyond that of the program that supports it. This higher

complexity is the higher

dimensionality of relative meaning. The simpler program and skeleton

memory are like a note pad

that the world can bring into participation with its more complex attributes.

This process, relative to

beepers, is considered in more detail in a later section.
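
The teacher timer described above, a count of neural non-events and not a clock, can be sketched in Python. The starting count is an invented figure, "quiet" is taken to mean that no N in the Input half of the High Area reaches the threshold, and the class name is hypothetical.

```python
class TeacherTimer:
    """Counts quiet cycles in one beeper and releases one phrase."""

    def __init__(self, phrase, start=200):
        self.phrase = phrase
        self.start = start
        self.count = start

    def tick(self, input_half_levels, threshold):
        """Call once per cycle; returns the phrase when the timer runs
        out, otherwise None."""
        quiet = all(level < threshold for level in input_half_levels)
        if quiet:
            self.count -= 1
        if self.count <= 0:
            self.count = self.start
            return self.phrase
        return None
```

Because the count only moves on quiet cycles, anything that quiets or excites that half of the High Area, including regulation raising the threshold, changes when the phrase is played.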

Along the same lines, there seems to be an

inordinately large number of occasions where a

beeper will “announce” the teacher, more or less immediately before

it starts, by doing a short

abstract rendition of either phrase; as though it can sense, perhaps

from timing patterns, that the

teacher is about to play; but it doesn’t know which phrase. More likely,

this is another form of

elicitation, with associated learning.

Nothing so miraculous as parrot-like rendering

of the teacher phrases has come from these little

beepers. What they do, however, is more amazing to me. After

all, a much smaller and simpler

system could accurately “sample” the sound, and act like a parrot.

Throw in some noise factors of

variability, and you could make the computer seem smart. Beepers are

smart, in the associative

sense, and in a relative way.

There are some 256 possible tones producible

by each beeper, within a rather narrow range of

about two octaves. This means there should be a lot of sour notes.

The first thing the beepers do,

that is against the odds of random behavior, is to produce way too

many notes that have the right

relative pitch. They may be off-frequency, but there are little

strings of them that have the correct

frequency with respect to each other. There are also many single- and

double-note events, that are

close in pitch to the stimulus; though near-copying is not as exciting,

since the feedback-learning

system includes the mapped stimulus from input side to output side.

This tips the odds; but it is

exciting when the results show up days and weeks later! (You have many

mapped runs of

communication between parts of your cortex. At this writing, I don’t

know if one of them runs from

audition to speech.)

To be sure, most of the time is spent producing

rather random sounding behavior; beyond the

over-abundance of relative well-tempered pitch. This is particularly

true if you don’t get involved

with them. They seem to become much more responsive and intelligent

if you pay them more

attention, instead of just leaving them to the teacher, or each other.

After all, what can they teach

each other... and the teacher has no sensitivity.

A better teacher would “be there when you

ask.” It should occasionally start up, as this one

does; but then lead you along, a bit past where you know how to go

already. It should start with

only two or three notes, here. It should stand by, and watch for relative

phrase matches or

near-matches, and reward you with recognition by repeating the phrase,

plus a note or two — or

occasionally you get the whole phrase. It should sometimes follow with

the relative pitch, and

sometimes lead with the original pitch. Nevertheless, the beepers have

learned from the teachers.

There have been many occasions where they have poorly mimicked the

teacher, or have nicely

repeated a few of the notes; usually in the right order, but usually

bypassing some. Sometimes the

pitch is very close. When it has been a while since the teacher has

played, I’m pretty sure the pitch

has usually drifted; but it has good relative quality (I don’t have

perfect pitch, myself).
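
One way to picture the "relative phrase match" a better teacher would watch for is to compare the steps between notes rather than the notes themselves. The following is only a minimal sketch, not the program described here: it assumes tones are represented as integer indices (0 to 255) that are evenly spaced in pitch, and the function names and the `tolerance` parameter are hypothetical.

```python
def intervals(notes):
    """Return the step between each pair of consecutive tone indices."""
    return [b - a for a, b in zip(notes, notes[1:])]


def relative_match(phrase, heard, tolerance=0):
    """True if `heard` has the same melodic shape as `phrase`, at any starting pitch.

    `tolerance` is the largest allowed error, in tone steps, per interval
    (an assumed way of modeling a "near-match").
    """
    if len(phrase) != len(heard) or len(phrase) < 2:
        return False
    return all(abs(p - h) <= tolerance
               for p, h in zip(intervals(phrase), intervals(heard)))


def find_relative_matches(phrase, stream, tolerance=0):
    """Return start positions in `stream` where `phrase` appears in relative pitch."""
    n = len(phrase)
    return [i for i in range(len(stream) - n + 1)
            if relative_match(phrase, stream[i:i + n], tolerance)]


# Hypothetical data: a three-note teacher phrase and a run of beeper output.
teacher_phrase = [100, 110, 105]
beeper_output = [7, 40, 50, 45, 200, 3]
matches = find_relative_matches(teacher_phrase, beeper_output)
```

Here the phrase turns up at position 1 of the beeper output, sixty steps low but with the right shape. A teacher built this way could reward such a match by repeating the phrase plus a note or two.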

They do better when I whistle to them, while

I’m working in the room. The record, at this writing,

is the first five consecutive notes of “Over the Rainbow.” You seem

to get better behavior by leaving

one asleep while the other is awake, for a day or two at a time. If

you leave one awake too long, it

really seems to get dumber. This goes along with the idea of being

sensitive, as a teacher. I get

feelings from their behavior that prompt me to chip in some data. At

this writing, I’ve been foolin’

with them this way for about a year and a half. I have no doubt that

they learn. But I haven’t studied

them the way a pro would. The development of this program, and the

writing of this book, have

severely taxed my work schedule.

If you want to give them company, that “has

things in common” or “speaks the same language,”

you can copy the data from one beeper over the other, to

create a pair of twins. They don’t

stay identical for any time at all, in terms of the array of numbers;

but the general relative meaning

developed within them will stay similar for some time. Don’t forget

to transfer the Key data and

indexes, etc., as well. If you want to check the identical-ness of

two beepers, you also have to

bypass routines that are subject to C128 system timing exceptions.

It was difficult; but I was able to

get both sides to behave identically, up to the point of turning up

the mic volume and whistling at

one of them. You are what you learn.

Consciousness is not a substance you can touch,

and hold constant. It is active — when it works,

it flies — if it’s not flying, it doesn’t exist. Model airplanes really

fly. I think evolution has found a

physical principle, not unlike itself; and has put it to work. It discovered

the lens and the hinge, for

example. The materials involved are inconsequential, so long as the

principle can function.

However small, there is a real possibility

that the beepers have awareness. If they do, it is

probably a very faint, vague, low-detail experience, completely different

from ours, when we hear

the tones. It might correspond to the simple perception of touch, in

an ongoing series of patterns

between 16 pairs of “fingertips,” with 16 sensitivity levels in each

Input fingertip, and 16 muscle

strengths that can be applied to them from the Output side. Now imagine

this experience from the

point of view of being a lizard, with no other senses, or needs, and

you might have it.

There are a number of inescapable differences

between a computer system like a beeper, and a

nervous system, that do not allow a straightforward comparison by

neuron count. Real neurons

get tired real fast, in as little as 1/10 of a second if they’re taxed,

and it takes them about an hour

to fully recover. Taken literally, that’s a duty cycle near 1/36,000;

call it 1/1000, or 0.1%, to be conservative! Beeper Ns

have nearly a 100% duty cycle. So,

there are ways of looking at this and calling one beeper N worth a

thousand biological Ns. There

could even be an advantage to not having to pass on function handling

to a series of tiring neurons.

But when real Ns aren’t being taxed, they can probably chip in occasionally,

all day long, to

provide a thousand times the resolution. Beeper Ns don’t need food;

so they can be organized into

a system fully devoted to sense, learning, and output. Biological systems

are differentiated into all

kinds of subsystems that work together to keep the whole thing alive.

We got Ns running our heart

and breath, making us run and eat — all sorts of stuff that doesn’t

make us hear and speak; stuff that

we couldn’t live without. But this has brought us association of multiple

senses, and multiple

modalities with which to affect those senses.
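
The duty-cycle comparison can be worked through numerically. This is a rough sketch using only the figures quoted above (1/10 second of taxed activity, about an hour of recovery); the function name is hypothetical. Taken at face value those figures give roughly 1/36,000, so 1/1000 is best read as a conservative round number.

```python
def duty_cycle(active_seconds, recovery_seconds):
    """Fraction of time a unit is active over one work-and-recover cycle."""
    return active_seconds / (active_seconds + recovery_seconds)


# Figures from the text: 1/10 second of taxed work, about an hour to recover.
neuron = duty_cycle(0.1, 3600.0)

# A beeper N is treated here as always available (assumed 100% duty cycle).
beeper = 1.0

# How many taxed biological Ns one beeper N stands in for, on this measure alone.
equivalent_neurons = beeper / neuron

print(f"taxed neuron duty cycle: about 1/{round(1 / neuron):,}")
print(f"one beeper N is worth about {round(equivalent_neurons):,} taxed neurons")
```

The comparison only covers Ns that are being taxed. As noted above, untaxed Ns can contribute all day long, so the ratio is an upper bound on the beeper's advantage, not a measurement.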

It is not likely that I will attempt to work with

vision any time soon. I am looking forward to

expanding the “beepers” into “speakers” in the PC. Note that you need

a thousand times as much

computer to make the system 10 times as big in its three dimensions

of N count, N size, and speed.

This “size” is all in terms of speed. The program spends about as much,

or more, time handling

active Ns, as it does skipping the quiet ones. If the active ones take

ten times as long to handle, and

there are ten times as many Ns, you need 100 times the speed to get the same

cycle time, of about 1/10 second.

A 1/100 second cycle time might support speech, with the correct ear,

voice, and cortical tricks. The

hard part will probably be analysis of the speech cortex. There’s something

different going on there.
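
The scaling argument is simple multiplication, and it may help to see it laid out. This sketch assumes, as the paragraph does, that the work per cycle grows with the number of Ns times the handling cost of each N; the function and parameter names are hypothetical.

```python
def required_speedup(n_count_factor, n_size_factor, cycle_time_factor):
    """Speed multiple needed to run a larger system at a shorter cycle time.

    Assumes work per cycle scales with N count times N size.
    `cycle_time_factor` is how many times shorter the cycle must become.
    """
    return n_count_factor * n_size_factor * cycle_time_factor


# Ten times the Ns, each ten times as costly, same 1/10 second cycle.
same_cycle = required_speedup(10, 10, 1)

# The same system pushed to a 1/100 second cycle, the figure suggested for speech.
speech_cycle = required_speedup(10, 10, 10)

print(same_cycle, speech_cycle)
```

The first case gives the factor of 100 and the second the factor of 1000, which is the "thousand times as much computer" for a tenfold increase in all three dimensions.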

As important as vision and tool making have

been to us, I sense that speech is a thing that has

been paramount in our social evolution, and technological development.

Without it, I think we’d be

a lot like dogs that can walk upright. And I think dogs are virtually

as aware as we are, in a basic

sense. They deal with the world in terms of the environment, while

we are always referencing our

verbal base, as we mull through our conceptualizations, plans, desires,

and work. They don’t plan

for college, but they plan a little for what they need. Mostly they

react in the direction toward what

they need. They’ll wait until you’re gone to chew your slipper. When

they see, they are aware of

seeing what they see. They are aware of what they hear, in terms of

simple meaning associated with

their needs. They are aware of most environmental things the way we

would be, if we did not have

language. Even a mouse has a hippocampus and cortex. It has the rudiments

of a decision making

system. That system operates whenever the creature utilizes its knowledge

base. Its behavior is

learning, invoked by the past and present environment. We know that

mice can learn, and can put

their learning to use when it meets their needs.

It is interesting to consider manipulating

the data of these beepers. What is happening when you

swap one data set with another, in the same sets of physical memory?

Assuming there is some

consciousness involved, does it “stay” with the mass of the physical

memory array, or with the data

that resided there? The analogy is with our DNA here. My impression,

though unclear, is that our

bodies are replaced regularly; except for the heavy particles in the

DNA of the surviving neurons.

Do the particles in DNA somehow “receive” consciousness? One problem

here is cell death. We

remain ourselves, despite the loss of a huge number of DNA molecules...

different ones for different

people. And, no new replications are added to the system. This might

be because that would

destroy the meaning compiled there, by interfering with established

relative interactions. It can’t be

divine “tuning,” or identical twins would have a common awareness.

The DNA-cell produces the

support system for the meaning potentiated by world impressions, patterned

into the connections

of the support network. The active meaning is the consciousness. Substance

exists relative to that

meaning, in terms of its meaningful qualities, and implicated eventualities.

These qualities include

depth and size, as well as surface integrity upheld by electron skin.

Meaning can include color,

smell and sound. All of the meaning is relayed to us in complex arrangements

of relative timing. We,

too, are complex relative timing. The meaning, the substance, and the

DNA are all part of a greater

set of active relative memory of timing. We perceive time and distance

because we are more of

that same automatic inference of the point.

Your thought train and priorities move with

the data. The physical memory base is a location for

this in time. Each of two beepers is in different volumes of time,

composed of different eternity

loops, that all lead to each other. A copied beeper is the old one,

entering a different vantage point